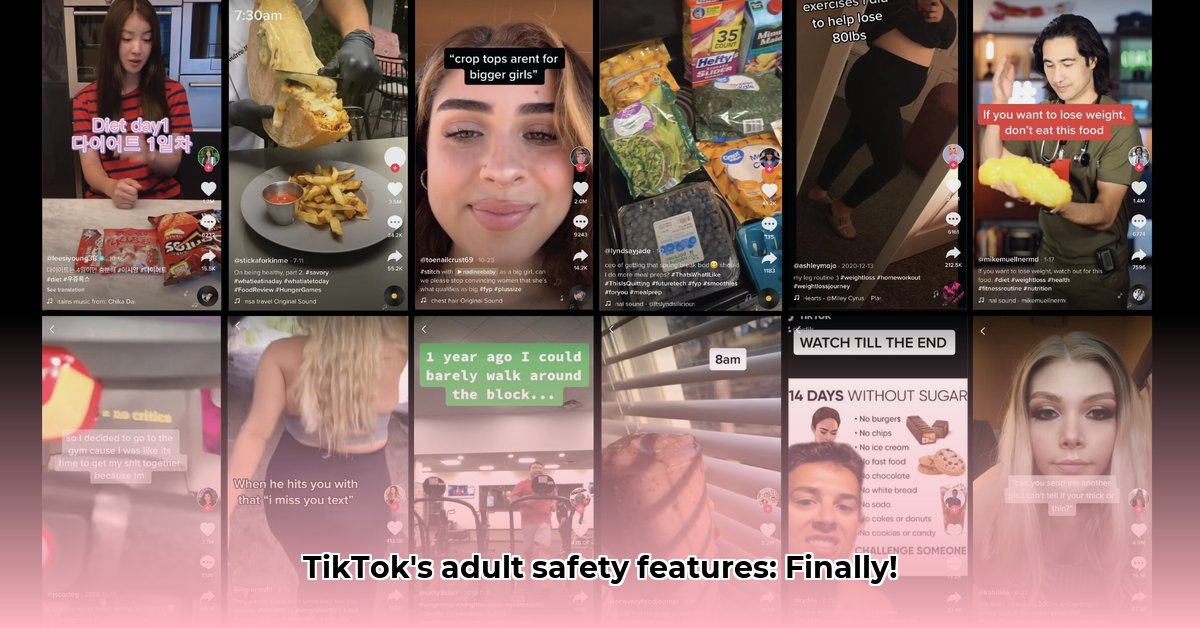

TikTok's explosive growth presents a significant challenge: balancing free expression with the safety and well-being of a massive, diverse user base, particularly its younger members. How the platform defines "adult" content, how it moderates that content, and what this means for the platform's future make for a complex and constantly evolving story. This article looks at the latest developments and at both the successes and the shortcomings of TikTok's strategies.

A Balancing Act: Protecting Young Users While Empowering Creators

TikTok's recent updates focus on strengthening content moderation, particularly around mature themes. They include a minimum age of 18 for hosting live streams, more precise age-based content tagging, and enhanced filters for inappropriate keywords in live stream comments. The effectiveness of these measures remains a subject of ongoing debate. What constitutes "adult content" is subjective and varies significantly across cultures, which makes consistent and fair moderation a formidable task for both algorithms and human moderators. Dr. Anya Sharma, a leading researcher in digital media ethics at Stanford University, notes, "The challenge isn't just technological; it's fundamentally about defining and enforcing subjective boundaries in a globalized, rapidly evolving digital space."

This creates a delicate balancing act. TikTok needs to ensure creator freedom while simultaneously shielding younger users from potentially harmful material. How well does its current system achieve this balance? The answer, as we'll see, is complex.

Do the New Tools Actually Work? Assessing Effectiveness

TikTok's new features are tools, and their effectiveness hinges on consistent application, continuous improvement, and a dedicated support team. While the platform has made strides in automated flagging and improved reporting mechanisms, questions remain about the speed and accuracy of these systems. Can TikTok reliably prevent underage users from accessing inappropriate content, and how effectively does its reporting system address user concerns? Neither question has a simple "yes" or "no" answer, and both remain areas of active refinement.

A crucial factor is the capacity of human moderators to review flagged content, handle appeals, and feed what they learn from real cases back into the AI algorithms. Even with these improvements, the sheer volume of content uploaded each day demands continued innovation.

TikTok receives millions of content reports daily, which illustrates the scale of the moderation challenge.

Is a purely algorithmic approach sufficient, or is the human element essential for navigating the nuances of context and culture in content moderation?

Global Challenges: Navigating Diverse Laws and Norms

TikTok's global reach introduces another layer of complexity. Laws concerning online safety, age verification, and data privacy vary widely across jurisdictions, and complying with all of them requires substantial resources and ongoing adaptation. At the same time, new trends and slang emerge constantly, so algorithms and filters need continuous updates to keep pace.

This dynamic setting highlights the need for a flexible, responsive, and internationally collaborative approach. User reporting plays a critical role in identifying issues that might escape algorithmic detection, providing valuable real-time feedback on the system's effectiveness.

"Navigating differing international legal requirements is a massive undertaking," states James Chen, Senior Legal Counsel at a global technology firm. "It necessitates a highly skilled and adaptable legal team."

The Future of TikTok Content Moderation: A Multifaceted Approach

The future of content moderation on TikTok will depend on several key factors: more sophisticated AI with reduced bias to identify inappropriate content, larger and better-trained teams of human moderators to handle complex cases, and stronger collaboration with child safety organizations and government regulators.

Actionable Step 1: Investment in explainable AI (XAI) to increase transparency in moderation decisions.

Actionable Step 2: Improved user reporting mechanisms with clear feedback on the processing of reports.

Actionable Step 3: International collaborations to establish best practices and standards for content moderation across diverse cultures and legal frameworks.

The ultimate goal is a platform that is both free and safe, accessible to all while protecting its youngest users. Reaching that balance requires strategies that keep evolving, driven by technological advances and sustained collaboration. The work of building safer online spaces is unfinished, and TikTok's continued adaptation reflects how quickly the challenge itself changes.

Last updated: Tuesday, May 06, 2025